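As part of the Research IT Infrastructure on the Venusberg Campus, the KWI (Koordinationsstelle wissenschaftliche Infrastruktur, the Coordination Office for Scientific Infrastructure) administers the management systems and storage capabilities of the Core Facilities of the Medical Faculty.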

The corresponding IT infrastructure is designed to fulfil the following requirements:

- Rapid transfer of raw data from the acquisition computers to a central storage unit, to ensure uninterrupted processing of samples on the instrumentation

- Rapid and dynamic exchange of data among the processing and computational units, i.e. workstations with dedicated software packages and the Core Unit Bioinformatics (CUBA)

- Easy and structured exchange of processed data with all users of the Bonn Technology Campus

Storage Systems and Data protection

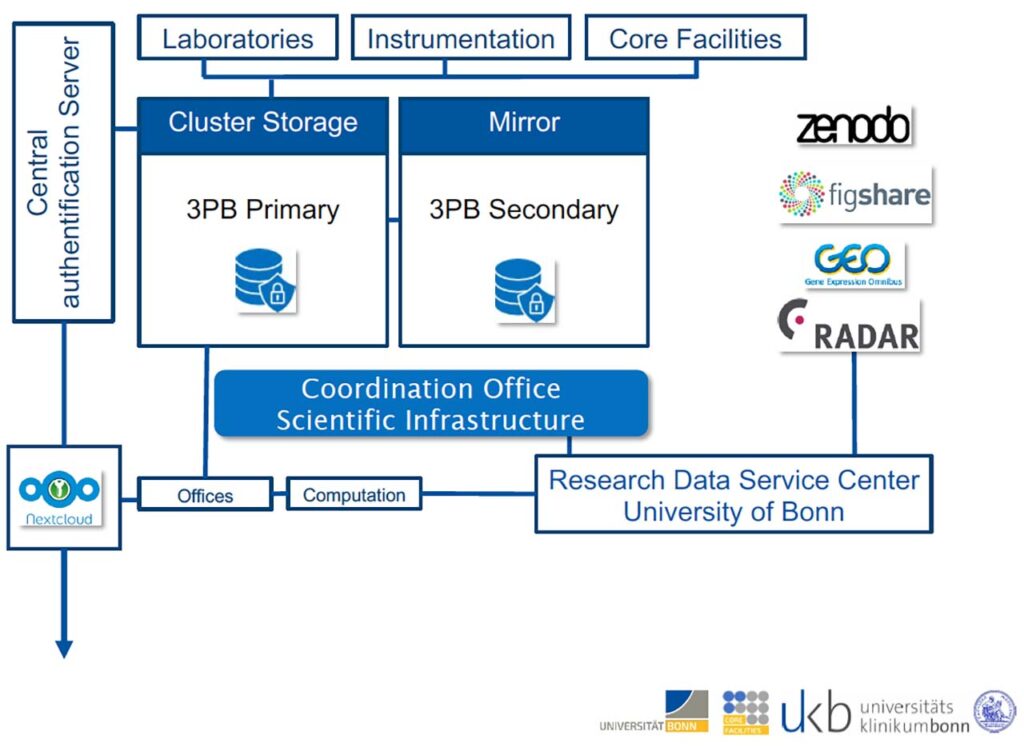

The primary storage for scientific data is a 3-petabyte Dell EMC Isilon storage array. It is configured so that storage is scalable and can be expanded easily. An identical system is located in a second certified server room to ensure reliable operation. Servers are hosted in certified server rooms that provide racks, climate control and an uninterruptible power supply. Maintenance and administrative operations are carried out by the University Hospital IT (uk-it). Data are stored in accordance with German law and the DSGVO (the EU General Data Protection Regulation, GDPR). The data protection rules are updated continuously.

User management is handled by the Koordinationsstelle wissenschaftliche Infrastruktur of the Medical Faculty of the University of Bonn. It is based on the Lightweight Directory Access Protocol (LDAP), which serves as a detailed identity management system with single sign-on (SSO). All data can be clearly identified by the user ID, group and project they belong to, so access to data can be regulated and controlled accordingly.
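The idea of regulating access by user ID, group and project can be illustrated with a minimal Python sketch. It is not the actual LDAP or Isilon configuration: the `User` and `DataSet` classes, the `can_read` function and the `project_members` mapping are hypothetical names introduced here for illustration only.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class User:
    uid: str                              # user ID as held in the directory
    groups: frozenset[str] = frozenset()  # groups the user belongs to


@dataclass(frozen=True)
class DataSet:
    path: str
    owner_uid: str  # user ID the data belong to
    group: str      # group the data belong to
    project: str    # project the data belong to


def can_read(user: User, data: DataSet,
             project_members: dict[str, set[str]]) -> bool:
    """Allow access for the owner, the data set's group, or project members."""
    if user.uid == data.owner_uid:
        return True
    if data.group in user.groups:
        return True
    return user.uid in project_members.get(data.project, set())
```

In a real deployment such decisions would be enforced by the directory service and the file system permissions rather than by application code; the sketch only shows how the three identifiers combine into an access rule.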

Data distribution and workspace

Users of the Bonn Technology Campus will each be provided with an individual space of one terabyte. Users of the Core Facilities (-Omics, Microscopy, Flow Cytometry, Bioinformatics) will be provided with space to store and share data among the user base defined by the centralized authentication server.

Projects can apply for a dedicated space to store, organize and share data. An initial storage capacity can be provided to collect all data during the runtime of the project and can be expanded upon request. System administrators for the project space will be appointed and will maintain the data structure according to the needs of the different projects. They will ensure that data are structured from the outset in a way that mirrors the final archiving structure of the data sets, which eases the transfer to the long-term storage unit.

The Dell EMC Isilon cluster is located in a research network of the university's IT infrastructure and can be accessed via standard data exchange protocols. Internet-based interfaces for data access are built into the Dell EMC Isilon system and allow access via a cloud structure based on the open-source Nextcloud package. Web-based access to the data sets is meant to simplify interaction and collaboration among users and groups within the CRC and associated research groups.

Long Term Storage

The Digital Science Center (DiCe) of the university will assist in setting up data management plans so that relevant data can finally be transferred into a long-term storage format and stored on an IBM Spectrum Protect (ISP) tape system provided by the university. All datasets will be published or archived according to the FAIR data principles. Persistent identifiers and metadata will ensure findability and semantic links between datasets and related publications. Templates including metadata such as project number, experiment, documentation, version and data format will be set up in collaboration with the DiCe, and researchers will be instructed in how to use them. The RADAR metadata scheme will serve as the basis for these templates.
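As a rough illustration of such a template, the following Python sketch models the metadata fields named above and checks a record for empty entries before archiving. The class name `DatasetMetadata`, the function `missing_fields` and the field spellings are assumptions made for this example; they do not reproduce the RADAR metadata scheme or the templates that will actually be defined with the DiCe.

```python
from dataclasses import asdict, dataclass


@dataclass
class DatasetMetadata:
    """Hypothetical template holding the fields mentioned in the text."""
    project_number: str
    experiment: str
    documentation: str
    version: str
    data_format: str


def missing_fields(meta: DatasetMetadata) -> list[str]:
    """Return the names of all fields that are empty or whitespace only."""
    return [name for name, value in asdict(meta).items()
            if not str(value).strip()]
```

A record would be considered ready for transfer to long-term storage only when `missing_fields` returns an empty list.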

The underlying IT infrastructure is summarized in the following scheme:

Campus-wide Management system for Core Facilities

The Coordination Office for Scientific Infrastructure manages a centralized core facility management system (https://ppms.eu/uni-bonn/login/?pf=3). The system is built on a range of specialized modules. Booking modules are mainly important for the core facilities to handle autonomous usage of their instrumentation and training. The modules include:

- Single sign-on access to all facilities

- Utilities for each facility and core manager to control their own services/capabilities/instruments

- Booking and ordering of resources (instruments, services, products)

- Advanced booking rules, access policy, pricing control (expert, novice, business hours, after hours)

- Integrated and detailed reporting (booked time, used time on a user, group or instrument basis, budget and income)

- Invoice generation

- Graduated user roles (researcher, PI, facility admin, institutional admin, superadmin)

Core managers have tools to manage access rules for their individual facility, integrated reporting and invoicing modules, and an easy way to monitor instrument utilization. Users have a single login to access the infrastructure and can switch between facilities from a single access point. Users can see all offered resources but can only access the instruments and services for which they have been authorized.
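How authorization and pricing control (expert or novice, business hours or after hours) might interact can be sketched in Python as follows. This is not the API of the management system: the rates, the business hours of 8:00 to 18:00 on weekdays, and the names `time_band` and `quote_booking` are all assumptions chosen for illustration.

```python
from datetime import datetime

# Assumed business hours (weekdays only); not taken from the actual system.
BUSINESS_START, BUSINESS_END = 8, 18

# Assumed hourly rates per (user level, time band); purely illustrative.
RATES = {
    ("expert", "business"): 20.0,
    ("expert", "after"): 10.0,
    ("novice", "business"): 30.0,
    ("novice", "after"): 15.0,
}


def time_band(start: datetime) -> str:
    """Classify a booking start as 'business' or 'after' hours."""
    is_weekday = start.weekday() < 5  # Monday is 0, Sunday is 6
    in_hours = BUSINESS_START <= start.hour < BUSINESS_END
    return "business" if is_weekday and in_hours else "after"


def quote_booking(user_level: str, authorized: set[str], instrument: str,
                  start: datetime, hours: float) -> float:
    """Return the price of a booking, or refuse it if the user is not authorized."""
    if instrument not in authorized:
        raise PermissionError(f"user is not authorized for {instrument}")
    return RATES[(user_level, time_band(start))] * hours
```

The authorization check comes first, so a user can see a resource and its pricing rules but cannot obtain a booking for an instrument without prior authorization.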

Reports for the funding agencies, based on resource utilization, proper allocation of grant money and overall scientific performance, can be prepared in detail at any time. These reports are used by the Dean's Office of the Medical Faculty and the deans for evaluation and are supplemented by cost/value analyses and information on the interaction among client users, scientific supervisors and platform consultants within the main areas of research. The management system therefore supports the faculty in decision-making on resource purchases, in monitoring platform performance and in general strategic planning.
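A utilization report of the kind described, comparing booked and used time on a user, group or instrument basis, could be computed along the following lines. The record layout (`booked_h`, `used_h` and the grouping keys) and the function name `utilization_by` are hypothetical; the actual reporting module of the management system may work differently.

```python
from collections import defaultdict


def utilization_by(records: list[dict], key: str) -> dict[str, dict[str, float]]:
    """Sum booked and used hours per value of `key` and derive utilization."""
    totals = defaultdict(lambda: {"booked_h": 0.0, "used_h": 0.0})
    for rec in records:
        totals[rec[key]]["booked_h"] += rec["booked_h"]
        totals[rec[key]]["used_h"] += rec["used_h"]
    report = {}
    for name, hours in totals.items():
        booked = hours["booked_h"]
        # Utilization is used time divided by booked time (0.0 if nothing booked).
        ratio = hours["used_h"] / booked if booked else 0.0
        report[name] = {**hours, "utilization": ratio}
    return report
```

Calling the function with `key="instrument"`, `key="group"` or `key="user"` on the same records yields the three report views mentioned in the module list.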